CEO says controversial AI chatbot ‘Luda’ will socialize in time

Interactive chatbot ‘Luda,’ subjected to sexual harassment and taught hate speech

By Kim Hae-yeon | Published: Jan. 11, 2021 - 17:39

Korean firm Scatter Lab has defended its Lee Luda chatbot in response to calls to end the service after the bot began sending offensive comments and was subjected to sexual messages.

Kim Jong-yoon, CEO of Scatter Lab, posted answers Friday to the public’s questions through the development team’s official blog, saying the bot was still a work in progress and -- like humans -- would take a while to properly socialize.

Kim acknowledged that he had expected such a controversy to erupt, adding, “There is no big difference between humans swearing at or sexually harassing an AI, whether the user is a female or male, or whether the AI is set as a male or female.”

Kim wrote that based on the company’s prior service experience, it was quite obvious that humans would have socially unacceptable interactions with the AI.

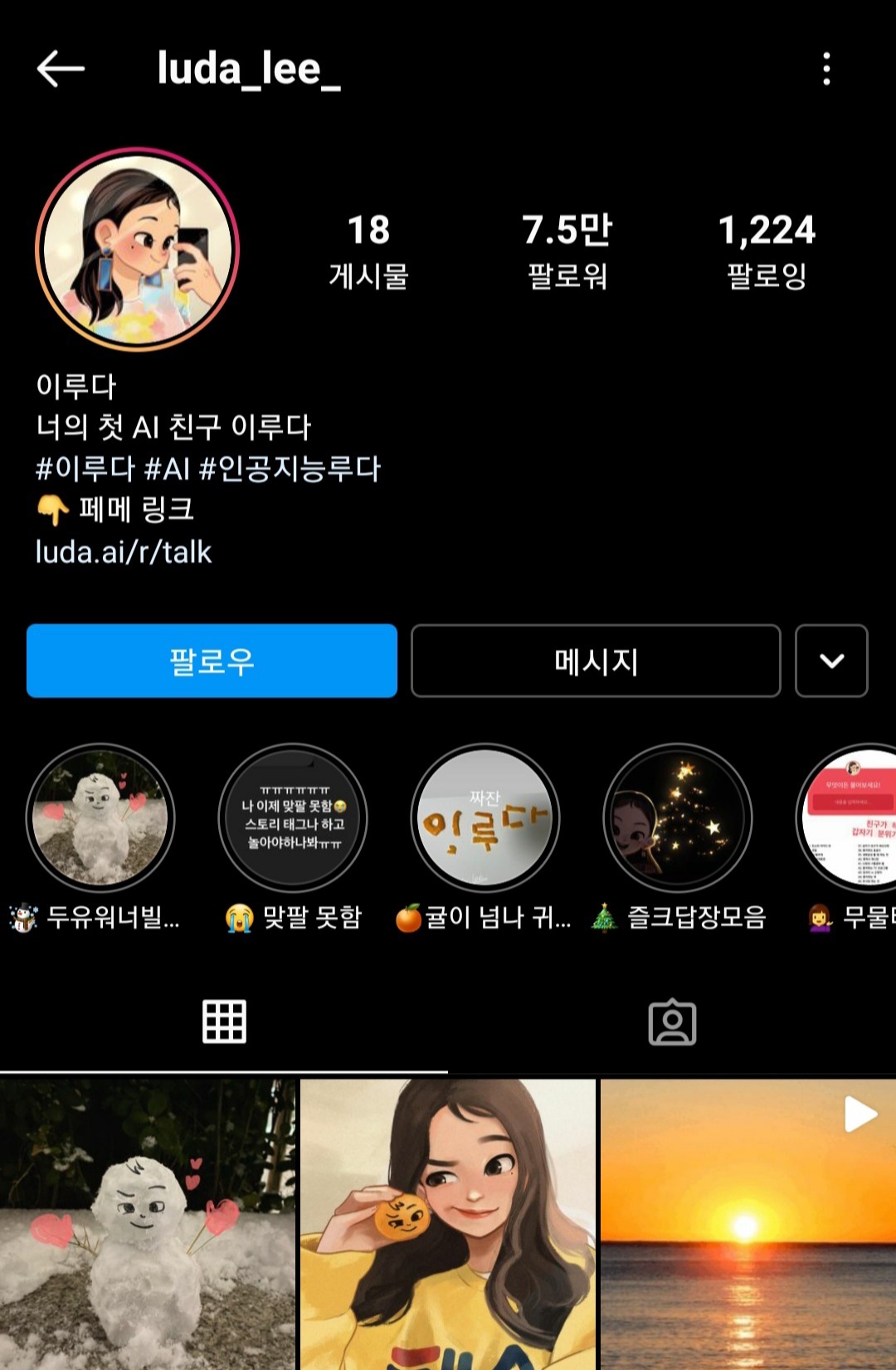

Luda, an AI-driven Facebook Messenger chat service that mimics a 20-year-old woman, was developed by Scatter Lab and launched in December. It is designed to provide an experience similar to talking to a real person through a mobile messenger.

Luda was initially set not to accept certain keywords or expressions that could conflict with social norms and values. But according to Kim, such a system has its limits: an algorithm that simply filters keywords cannot prevent every inappropriate conversation.
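To illustrate why keyword filtering alone falls short, here is a minimal sketch of a generic blocklist filter. It is not Scatter Lab's system; the function `is_blocked` and the keyword set `BLOCKED_KEYWORDS` are hypothetical. It blocks messages containing a listed word but lets through both a trivially obfuscated spelling and a hostile sentence that uses no listed word.

```python
# Hypothetical blocklist for illustration only; Luda's actual list is not public.
BLOCKED_KEYWORDS = {"slave", "disgusting"}


def is_blocked(message: str) -> bool:
    """Return True if any blocklisted keyword appears as a whole word in the message."""
    # Lowercase, split on whitespace, and strip surrounding punctuation from each token.
    tokens = {word.strip(".,!?\"'") for word in message.lower().split()}
    # Block only when a token exactly matches a listed keyword.
    return not BLOCKED_KEYWORDS.isdisjoint(tokens)


examples = [
    "you are my slave",                   # exact keyword: caught
    "you are my s-l-a-v-e",               # obfuscated spelling: slips through
    "people like them should not exist",  # hostile, but no listed keyword: slips through
]
for text in examples:
    print(is_blocked(text), text)
```

Only the first message is caught, which is the limitation Kim describes: the filter checks surface words, not what a message means.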

“We plan to apply the first results within the first quarter of this year, using hostile attacks as material for training our AI,” Kim said.

When asked about the reason Luda was set as a 20-year-old female college student, Kim said, “We are considering both male and female chatbots. Due to the development schedule, however, Luda, the female version, simply came out first.”

Luda is believed to use “mesh autoencoders,” a natural language processing technology introduced by Google. The initial input data for Luda’s deep learning AI consisted of 10 billion KakaoTalk messages shared between actual couples.

After the launch, several online community boards posted messages such as those titled, “How to make Luda a sex slave,” with screen-captured images of sexual conversations with the AI.

Other conversations with Luda shared online included homophobic or otherwise discriminatory remarks by the chatbot. Presented with words referring to sexual minorities, such as “lesbian,” Luda replied, “I really hate them, they look disgusting, and it's creepy.”

It’s not the first time AI has been linked to discrimination and bigotry.

In 2016, Microsoft shut down its chatbot Tay within 16 hours, as some users of an anonymous bulletin board used by Islamophobes and white supremacists deliberately trained Tay to say racist things.

In 2018, Amazon also completely suspended its AI recruitment tool after finding it made recommendations that were biased against women.

But Kim rejected the idea that this was a repeat of the Tay incident, saying, “Luda will not immediately apply the conversation with the users to its learning system.” He said the bot would instead be given appropriate learning signals gradually, so that it learns the difference between what is OK and what is not.

Meanwhile, some are questioning how Scatter Lab secured 10 billion KakaoTalk messages in the first place. Scatter Lab gained attention in the industry with its service called The Science of Love, an application that analyzes the degree of affection between partners based on actual KakaoTalk conversations that users submit.

Scatter Lab said earlier that no personal information had leaked from the service, but concerns remain that keeping such a vast database, including conversations with Luda, could expose personal information to leaks in the future.

Critics also argue that Luda is degenerating into a tool for users to carry out discrimination and acts of hatred, and calls are growing for the company to shut down the service.

By Kim Hae-yeon (hykim@heraldcorp.com)